AI Dubbing Video Maker

Generate professional dubbed videos from text in minutes. Agent Opus is an AI dubbing video maker that transforms scripts, prompts, or blog posts into complete videos with cloned voices, AI avatars, and dynamic motion graphics. No recording studio, no voice actors, no manual editing. Describe your video, choose your voice and language, and publish instantly to TikTok, YouTube, LinkedIn, or anywhere your audience lives.

Explore what's possible with Agent Opus

Reasons why creators love Agent Opus' AI Dubbing Video Maker

Consistent Brand Sound

Maintain the same vocal identity and messaging quality in every language so your brand feels cohesive no matter where viewers watch.

Global Reach Overnight

Turn one video into dozens of language versions so your content connects with audiences worldwide without reshoots or studio time.

Launch-Ready in Minutes

Skip weeks of translation coordination and post-production so you can publish multilingual content while ideas are still fresh and trending.

Budget-Friendly Expansion

Eliminate costly voice actors and localization teams so you can scale internationally without burning through your production budget.

Your Voice, Every Language

Keep your authentic tone and personality intact across all dubbed versions so viewers feel like you're speaking directly to them.

Effortless Workflow Integration

Generate dubbed versions without learning complex software or managing multiple vendors so you stay focused on creating great content.

How to use Agent Opus' AI Dubbing Video Maker

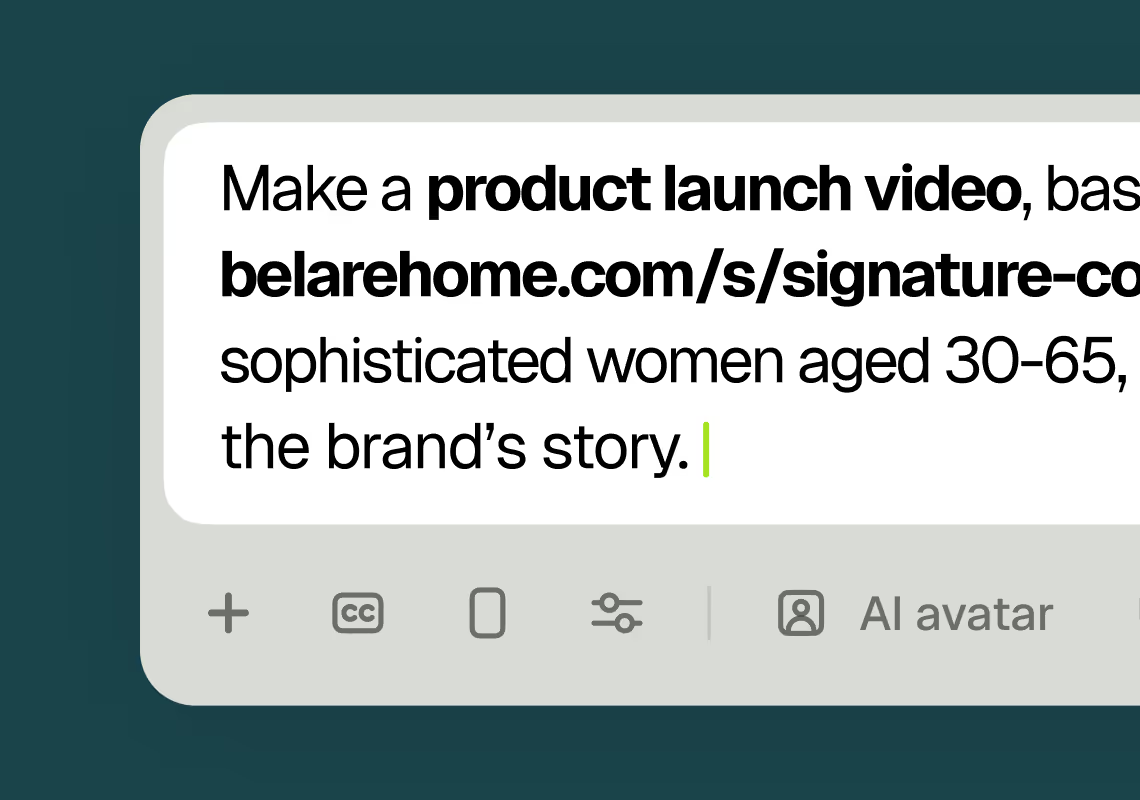

1. Describe your video

Paste your promo brief, script, outline, or blog URL into Agent Opus.

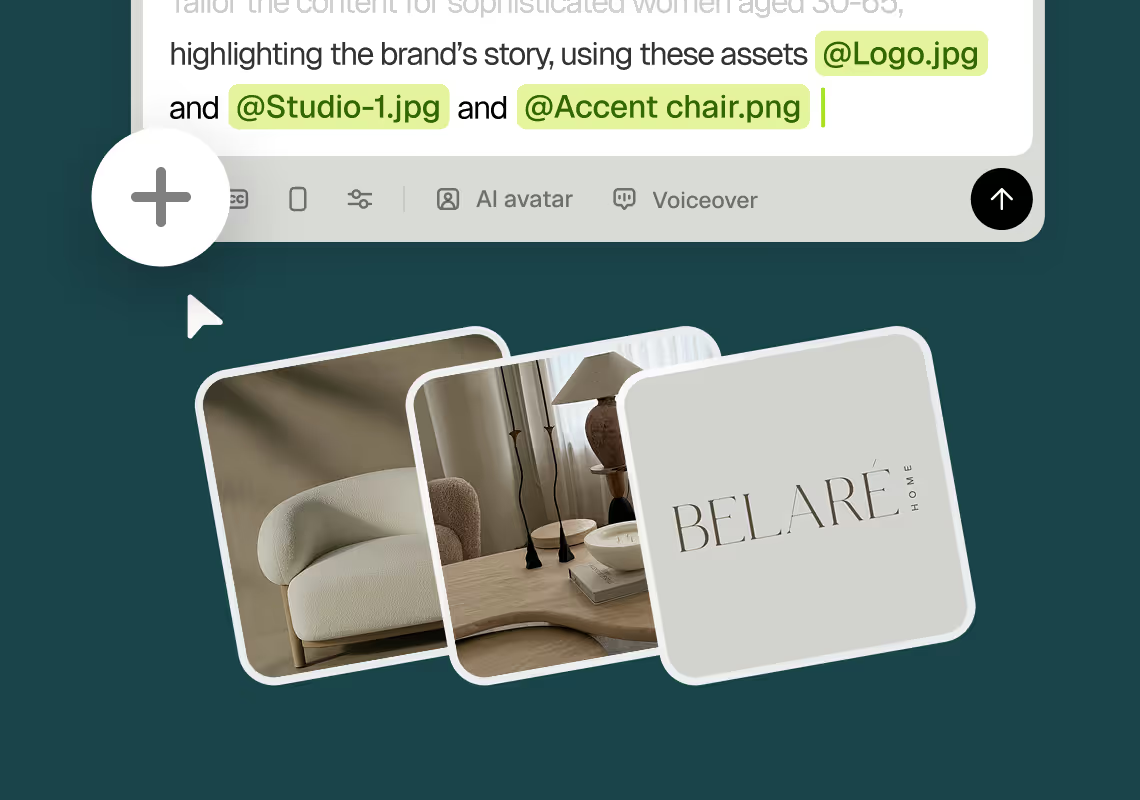

2. Add assets and sources

Upload brand assets like logos and product images, or let the AI source stock visuals automatically.

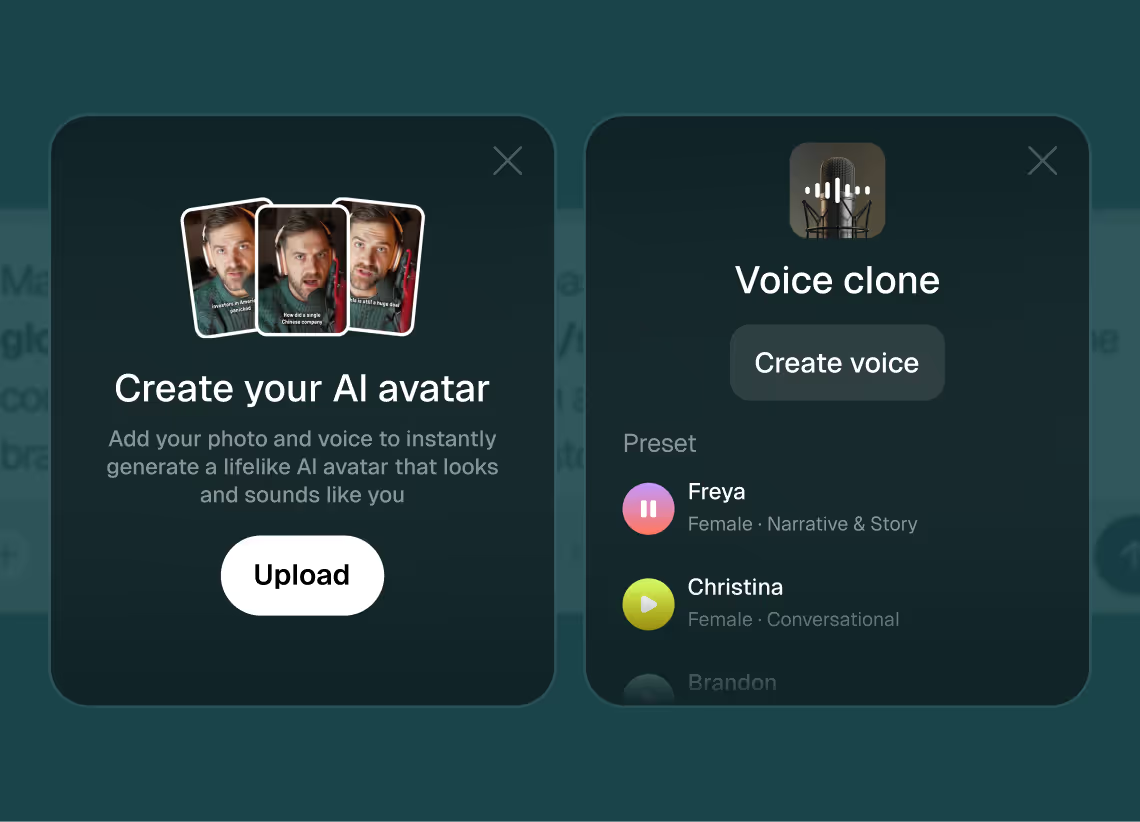

3. Choose voice and avatar

Choose a voice (clone your own or pick an AI voice) and an avatar style (your own or AI-generated).

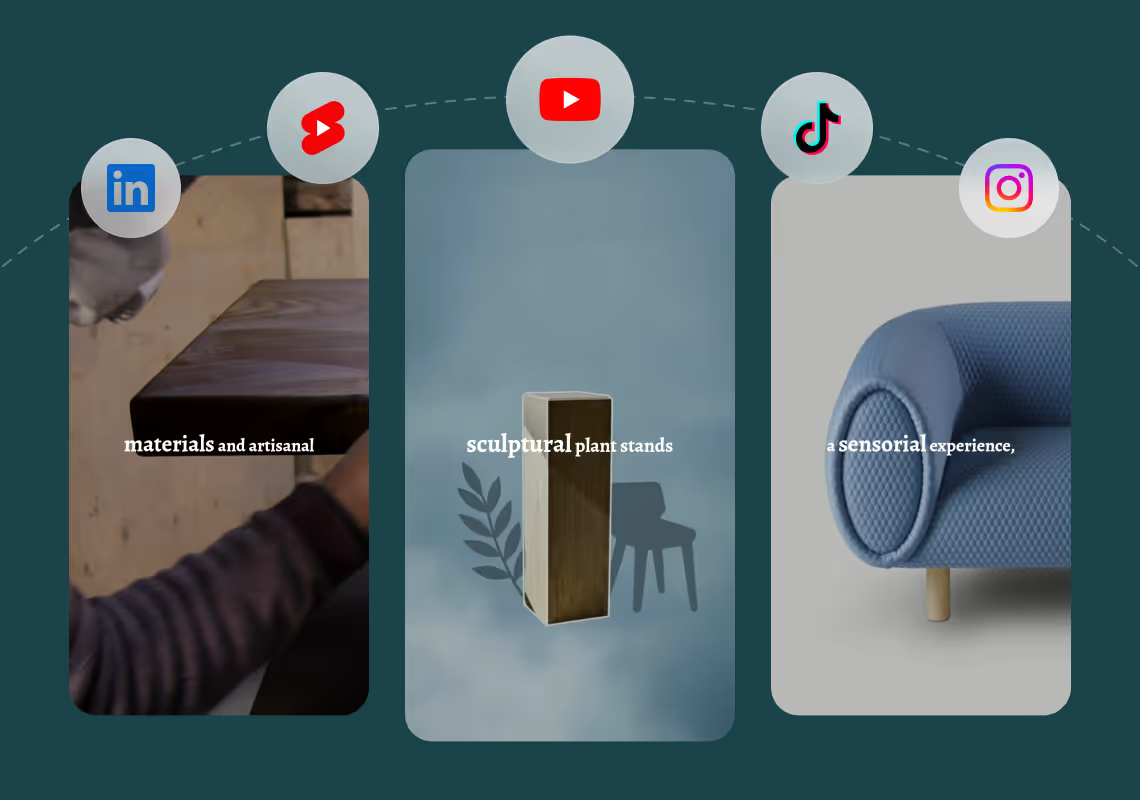

4. Generate and publish

Click Generate, then download your finished dubbed video in minutes, ready to publish across all platforms.

8 powerful features of Agent Opus' AI Dubbing Video Maker

Lip-Sync Accuracy

Match dubbed voiceovers to on-screen speaker movements for natural, believable multilingual videos.

Preserve Brand Voice

Maintain consistent tone, pacing, and personality across all dubbed language versions of your content.

Batch Dubbing Generation

Create multiple language versions of the same video simultaneously to accelerate global content rollout.

Voice Cloning for Dubbing

Clone your original speaker's voice and apply it across translated audio tracks automatically.

Automated Timing Alignment

AI adjusts dubbed speech length and pauses to match original video pacing without manual editing.

High-Fidelity Audio Output

Produce studio-quality dubbed voiceovers that sound natural and professional in every target language.

Instant Translation Workflow

Upload your video script or audio and receive dubbed versions in target languages within minutes.

Multi-Language Voice Dubbing

Generate videos with AI-dubbed audio in dozens of languages while preserving original tone and emotion.

Testimonials

Awesome output, Most of my students and followers could not catch that it was using Agent Opus. Thank you Opus.

Wealth with Gaurav

This looks like a game-changer for us. We're building narrative-driven, visually layered content — and the ability to maintain character and motion consistency across episodes would be huge. If Agent Opus can sync branded motion graphics, tone, and avatar style seamlessly, it could easily become part of our production stack for short-form explainers and long-form investigative visuals.

srtaduck

wow that's actually great 🔥

irtaza65

Frequently Asked Questions

How does the AI dubbing video maker handle voice cloning across different languages?

Agent Opus uses advanced voice cloning technology to capture the unique characteristics of your voice, including tone, pitch, rhythm, and delivery style, then applies those characteristics to dubbed audio in your target languages. When you provide a voice sample or record directly in the platform, the AI analyzes hundreds of vocal features to build a voice model. This model can then generate speech in multiple languages while maintaining your recognizable vocal signature.

The process works differently than simple translation and text-to-speech. Agent Opus does not just translate words and read them in a generic voice. Instead, it preserves the emotional nuance, pacing, and personality of the original speaker across language boundaries. For example, if your English voice has a warm, conversational tone with slight pauses for emphasis, the Spanish or French dubbed version will carry those same qualities. This creates a consistent brand voice across all your international content.

The AI also handles phonetic challenges automatically. Different languages have different sound patterns, mouth shapes, and rhythm structures. Agent Opus adjusts the dubbed audio to sound natural in each target language while keeping your core vocal identity intact. You can generate dubbed videos in languages you do not speak fluently, and the output will sound like a native speaker with your voice characteristics.

For creators and marketers expanding into global markets, this means you can maintain personal connection with international audiences without hiring voice actors for each language or learning multiple languages yourself. The voice cloning works from short samples, typically just a few minutes of clear audio, and improves as you generate more content. Agent Opus also offers a library of professional AI voices if you prefer not to clone your own voice, with options for different ages, genders, accents, and speaking styles across dozens of languages.

What are best practices for scripts when using an AI dubbing video maker for multiple languages?

Writing scripts for an AI dubbing video maker requires thinking about how language structure and cultural context affect video pacing and visual sync.

Start with clear, conversational language in your source script. Avoid idioms, slang, or culturally specific references that do not translate well. Phrases like "hit it out of the park" or "piece of cake" may confuse international audiences or require awkward workarounds in dubbed versions. Instead, use direct language that conveys your meaning literally. For example, say "achieve excellent results" rather than "hit it out of the park."

Keep sentences relatively short and focused on one idea each. Long, complex sentences with multiple clauses can become tangled in translation and make dubbed audio harder to sync with visuals. Aim for sentences under 20 words when possible. This also helps viewers process information more easily, especially when watching in a non-native language.

Consider text expansion and contraction across languages. Some languages require more words or syllables to express the same idea. German and Finnish often run longer than English, while Chinese and Japanese can be more compact. Agent Opus adjusts pacing automatically, but you can help by leaving natural pauses in your script and avoiding wall-to-wall narration. Build in visual moments where no voiceover is needed, giving the AI room to adjust timing across language versions without feeling rushed or dragging.

Use active voice and strong verbs. Passive constructions and weak verbs translate poorly and sound unnatural in many languages. "We built this feature" is clearer and more direct than "This feature was built by us." Active voice also creates more engaging dubbed audio with better rhythm and energy.

Include pronunciation guides for brand names, product names, or technical terms that should sound consistent across all languages. Agent Opus can maintain specific pronunciations when you flag them, ensuring your brand identity stays intact in every dubbed version.

Test your script by reading it aloud before generating dubbed videos. If it sounds awkward or unnatural when you speak it, it will sound worse in dubbed form. Conversational, speakable language always works best for AI dubbing.
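
The sentence-length guideline above can be checked mechanically before a script is submitted. The following is a minimal sketch, not part of Agent Opus: the function name `long_sentences` and the naive sentence splitter are assumptions made for illustration, and the 20-word limit is simply the guideline quoted in this answer.

```python
import re

# Guideline from the answer above: aim for sentences under 20 words.
MAX_WORDS = 20


def long_sentences(script: str, max_words: int = MAX_WORDS) -> list[tuple[int, str]]:
    """Return (word_count, sentence) for every sentence with max_words or more words.

    Hypothetical helper for illustration only. Sentences are split naively on
    '.', '!' or '?' followed by whitespace, so abbreviations such as "e.g."
    will be split incorrectly.
    """
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    flagged = []
    for sentence in sentences:
        count = len(sentence.split())
        if count >= max_words:
            flagged.append((count, sentence))
    return flagged
```

Calling `long_sentences(script_text)` on a draft returns the sentences worth shortening, each paired with its word count. An empty list means every sentence is under the limit.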

Can an AI dubbing video maker maintain brand consistency across different language versions?

Agent Opus is built specifically to maintain brand consistency when generating dubbed videos across multiple languages. The platform treats your brand assets, visual style, and messaging as core elements that remain constant while only the language and dubbed audio change.

When you create a dubbed video project, you can upload your logo, brand colors, product images, and other visual assets once, and Agent Opus applies them consistently across all language versions. This means a viewer in Spain sees the same logo placement, color scheme, and product shots as a viewer in Japan, with only the dubbed voiceover and any on-screen text adapted to their language.

The AI motion graphics system maintains the same visual rhythm and style across languages, even when dubbed audio timing varies slightly. If your English version has a dynamic product reveal at the 15-second mark, the French and German versions will feature the same reveal, adjusted naturally to match the pacing of the dubbed narration in those languages. Visual storytelling stays consistent while accommodating linguistic differences.

For voice consistency, Agent Opus lets you use the same cloned voice across all language versions, or select AI voices that match in tone and energy level. If your brand voice is warm and friendly in English, you can ensure the Japanese and Portuguese dubbed versions carry that same warmth through voice selection and delivery style settings.

The platform also maintains consistent messaging hierarchy across languages. Your key value propositions, calls to action, and brand promises appear in the same visual and temporal positions across all dubbed versions. This creates a cohesive brand experience regardless of which language version a viewer encounters.

For teams managing multi-language content, Agent Opus provides templates and style presets that lock in your brand guidelines. Once you define your visual style, voice settings, and pacing preferences, every dubbed video you generate follows those rules automatically. This eliminates the inconsistency that often happens when different translators, voice actors, and editors work on separate language versions without tight coordination. The result is a library of dubbed videos that feel like they came from the same creative team, even though they were generated automatically in minutes rather than produced manually over weeks.

How does the AI dubbing video maker handle visual sync when dubbed audio length varies across languages?

Agent Opus uses intelligent scene pacing and visual assembly to keep dubbed videos feeling natural even when audio length varies significantly across languages. The AI analyzes the semantic content and emotional beats of your script, then builds visual sequences that flex to accommodate different dubbed audio durations without feeling rushed or dragging.

When you generate a dubbed video, Agent Opus first processes your script in all target languages to understand the timing differences. Some languages will run longer, others shorter. The AI then creates a visual structure with flexible timing zones. Key moments like product reveals, calls to action, or emotional peaks are locked to specific points in the narrative, while transitional scenes and supporting visuals can expand or contract to match the dubbed audio length in each language.

For example, if your English script runs 45 seconds and the German dubbed version runs 52 seconds due to language structure, Agent Opus does not simply slow down the entire video. Instead, it identifies scenes that can naturally hold longer, such as product shots, lifestyle footage, or motion graphics sequences, and extends those by a few frames or seconds. The pacing feels intentional, not stretched. Similarly, if the Japanese dubbed version runs only 38 seconds, the AI tightens transitions and reduces hold times on non-critical visuals, maintaining energy without feeling frantic.

The platform also uses visual techniques to mask timing differences. Dynamic motion graphics, layered animations, and scene transitions create visual interest that makes slight pacing variations imperceptible to viewers. A viewer watching the Spanish dubbed version has no idea the English version is seven seconds shorter because the visual storytelling feels complete and purposeful in both.

For videos with AI avatars, Agent Opus syncs lip movements and gestures to the dubbed audio in each language. The avatar appears to speak naturally in German, French, or Mandarin, with mouth shapes and timing that match the phonetic patterns of each language. This is not a simple overlay: the AI generates language-specific avatar performances that look authentic.

The system also handles cultural pacing preferences. Some cultures prefer faster, more energetic delivery, while others favor slower, more contemplative pacing. Agent Opus can adjust visual rhythm to match these preferences while keeping your core message and brand identity consistent across all dubbed versions.
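
The flexible-timing idea in this answer can be illustrated with a small sketch. It is not Agent Opus's actual implementation: the `Scene` class, the `fit_scenes` function, and the scene names and durations are all hypothetical. The sketch also simplifies "locked to specific points in the narrative" to "locked scenes keep their original duration", and it spreads the timing difference proportionally across the remaining scenes.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Hypothetical scene record used only for this illustration."""
    name: str
    duration: float       # seconds in the source-language cut
    locked: bool = False  # locked scenes (reveals, calls to action) keep their length


def fit_scenes(scenes: list[Scene], target_total: float) -> list[Scene]:
    """Scale only the flexible scenes so the total runtime equals target_total."""
    locked_total = sum(s.duration for s in scenes if s.locked)
    flexible_total = sum(s.duration for s in scenes if not s.locked)
    if flexible_total <= 0:
        raise ValueError("no flexible scenes to absorb the timing difference")
    remaining = target_total - locked_total
    if remaining <= 0:
        raise ValueError("target is shorter than the locked scenes alone")
    scale = remaining / flexible_total
    # Locked scenes are returned unchanged; flexible scenes stretch or shrink.
    return [
        s if s.locked else Scene(s.name, s.duration * scale, s.locked)
        for s in scenes
    ]


# Assumed 45-second source cut, matching the English example above.
english_cut = [
    Scene("intro", 10.0),
    Scene("product reveal", 5.0, locked=True),
    Scene("product shots", 20.0),
    Scene("call to action", 10.0, locked=True),
]

german_cut = fit_scenes(english_cut, 52.0)    # longer dubbed audio
japanese_cut = fit_scenes(english_cut, 38.0)  # shorter dubbed audio
```

In this sketch the reveal and call to action keep their length in every version, while the intro and product shots absorb the seven extra seconds for German or give up seven seconds for Japanese. A production system would presumably weight scenes individually instead of scaling them uniformly.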