AI Lip Sync Video Generator

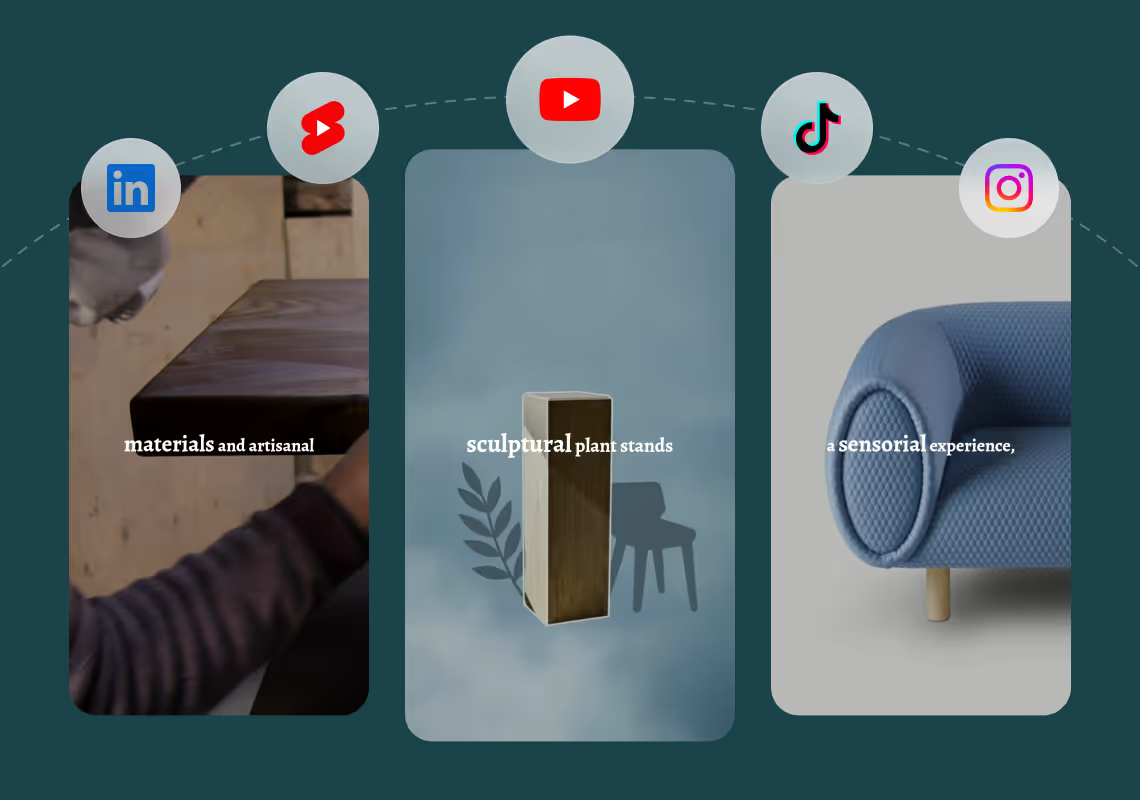

Agent Opus creates AI lip sync video from any text input. Describe your message, paste a script, or drop in a blog URL, and get a finished talking-head video with synchronized mouth movements, voice, and motion graphics. No manual syncing, no editing timeline, no rendering delays. Your AI avatar speaks your words in an AI voice or your own cloned voice, with lips aligned to the audio, ready to publish on TikTok, Instagram Reels, YouTube Shorts, or LinkedIn. One prompt delivers one complete video.

Reasons why creators love Agent Opus' AI Lip Sync Video Generator

Repurpose Content Instantly

Turn one recording into multiple versions for different platforms, audiences, or campaigns without starting from scratch.

Scale Without Burnout

Create dozens of personalized videos in the time it used to take for one, freeing you to focus on strategy and growth.

Perfect Sync Every Time

Never worry about mismatched audio again: the avatar's lips move naturally with every word, accent, and pause.

Scale Content Effortlessly

Create dozens of personalized video variations from one recording, each perfectly lip-synced to different scripts.

Fix Mistakes in Seconds

Update dialogue or correct errors without re-recording footage, saving hours of production time.

Launch-Ready in Minutes

Skip studio costs and lengthy editing sessions while delivering perfectly synced videos that look professionally produced.

How to use Agent Opus’ AI Lip Sync Video Generator

1

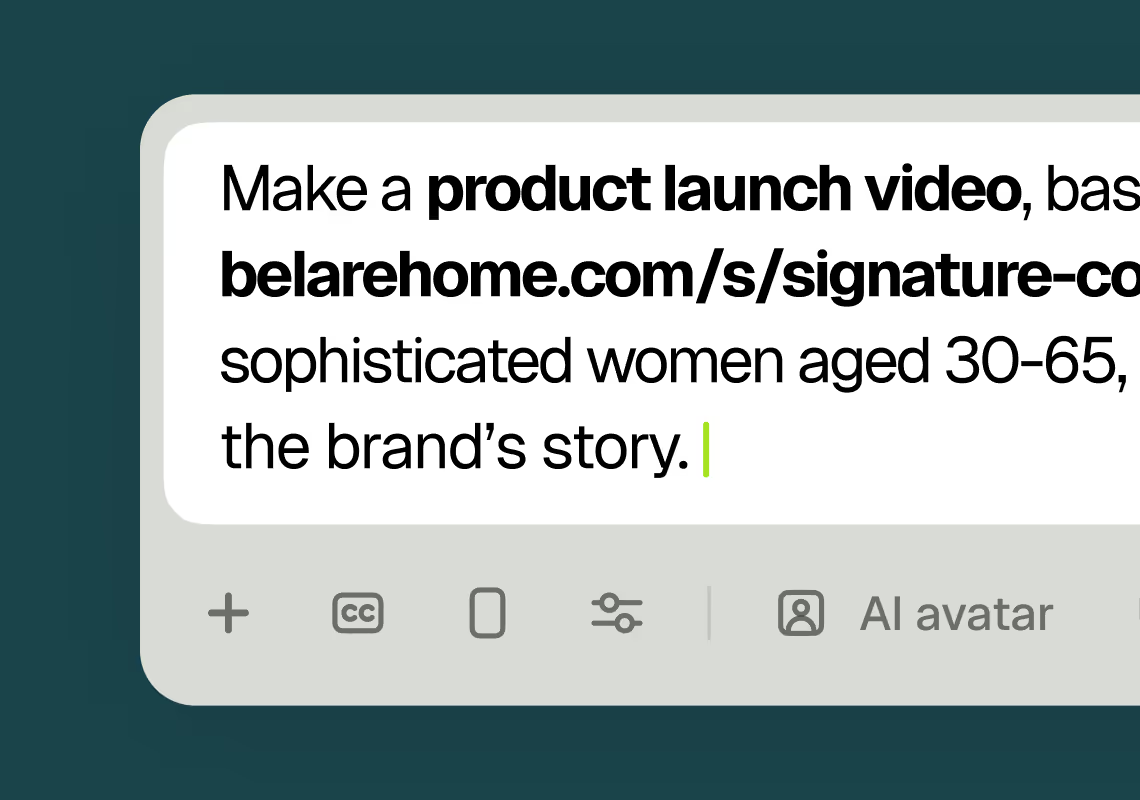

1Describe your video

Paste your promo brief, script, outline, or blog URL into Agent Opus.

2

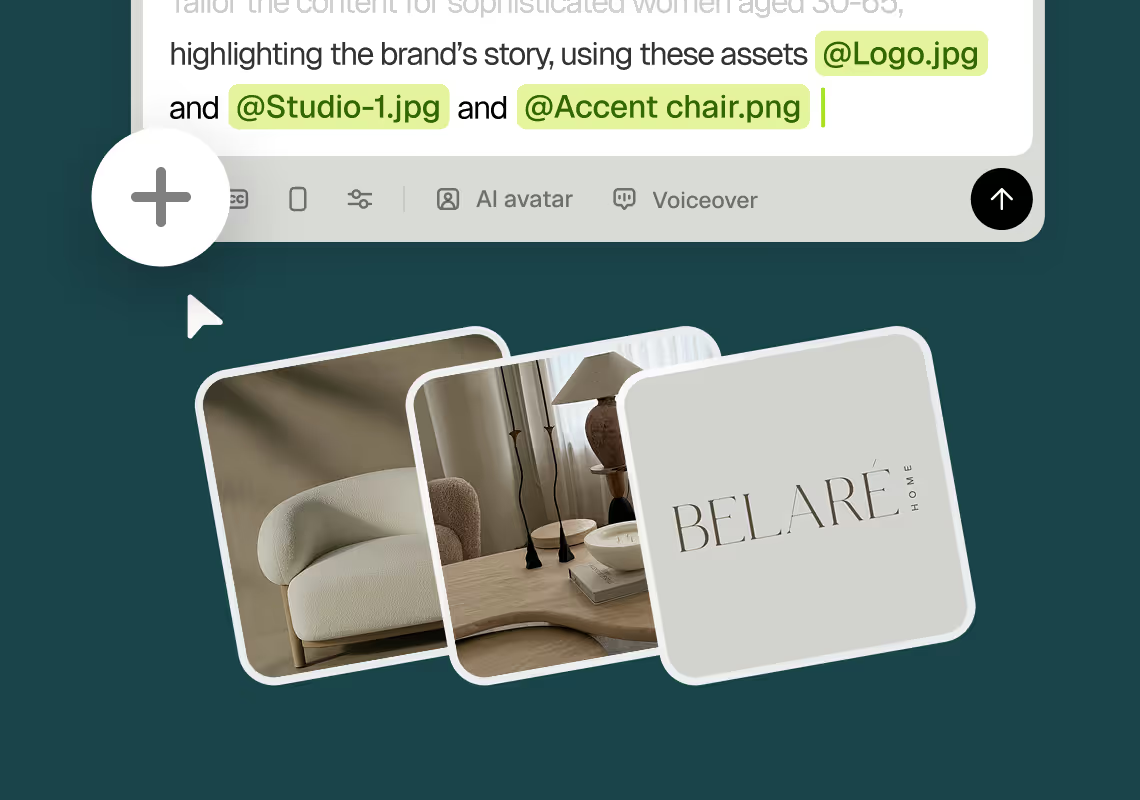

2Add assets and sources

Upload brand assets like logos and product images, or let the AI source stock visuals automatically.

3

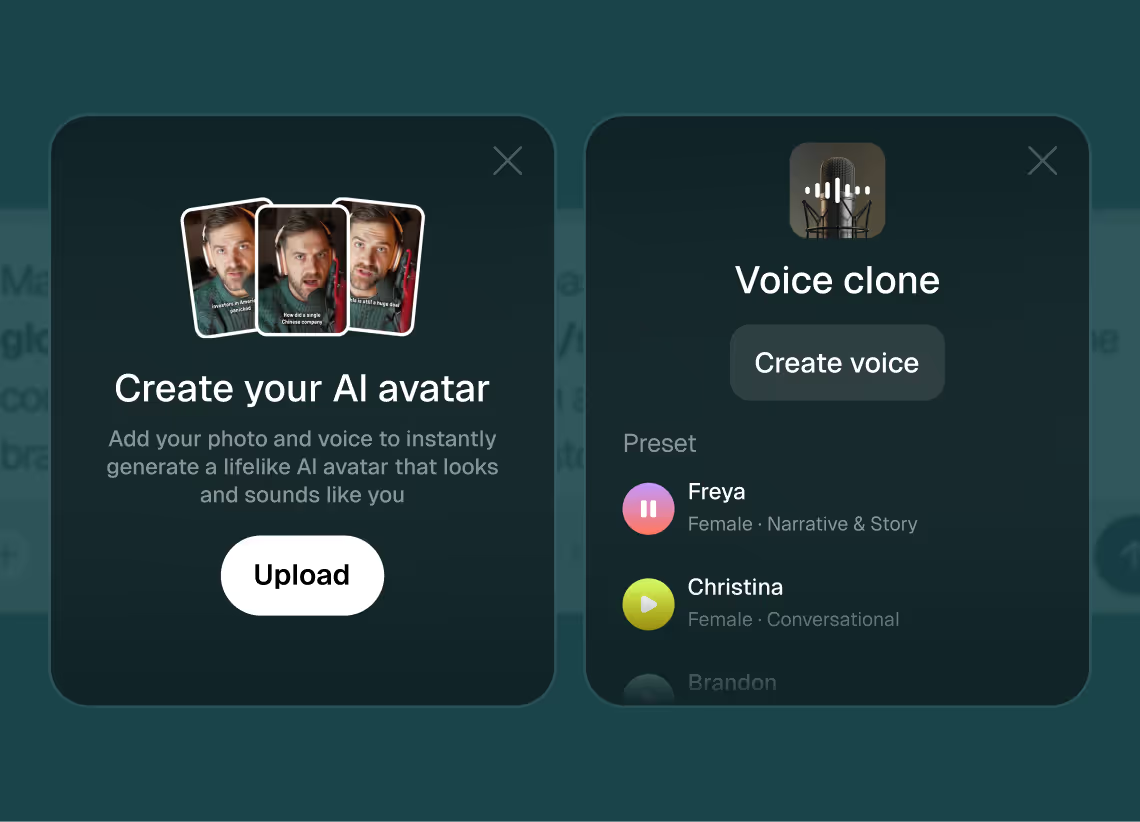

3Choose voice and avatar

Pick a voice (clone your own or choose an AI voice) and an avatar style (your own likeness or an AI avatar).

4. Generate and publish

Click generate and download your finished promo video in seconds, ready to publish across all platforms.

8 powerful features of Agent Opus' AI Lip Sync Video Generator

Instant Avatar Sync

Type a script and Agent Opus generates a video with synchronized avatar speech instantly.

Custom Voice Integration

Upload your own audio and watch AI avatars lip sync perfectly to your narration.

Multi-Language Sync

Create lip-synced videos in dozens of languages with accurate phoneme matching.

Professional Presenter Videos

Produce polished talking-head videos with flawless lip sync for training or marketing.

Realistic Lip Sync

Generate videos where avatar mouths move naturally in sync with your audio script.

No Recording Required

Skip filming and generate fully lip-synced presenter videos from text alone.

Brand-Consistent Avatars

Choose or customize avatars that lip sync on-brand messaging across all your videos.

Emotion-Matched Expressions

Avatars display facial expressions that align with the tone of your synced dialogue.

Testimonials

Awesome output. Most of my students and followers could not catch that it was using Agent Opus. Thank you Opus.

Wealth with Gaurav

This looks like a game-changer for us. We're building narrative-driven, visually layered content — and the ability to maintain character and motion consistency across episodes would be huge. If Agent Opus can sync branded motion graphics, tone, and avatar style seamlessly, it could easily become part of our production stack for short-form explainers and long-form investigative visuals.

srtaduck

I reviewed version a and I was very impressed with this version, it did very well in almost all aspects that users need, you would only have to make very small changes and maybe replace one or 2 of the pictures, but even saying that it could be used as is and still receive decent views or even chances at going viral depending on the story or the content the user chooses.

Jeremy

all in all LOVE THIS agent. I'm curious to see how I can push it (within reason) Just need to learn to get the consistency right with my prompts

Rebecca

Frequently Asked Questions

How does AI lip sync video handle different voice styles and accents?

Agent Opus analyzes the phonetic structure of your audio regardless of accent, speaking speed, or vocal tone. When you clone your voice or select an AI voice, the system maps each phoneme (the smallest unit of speech sound) to a corresponding mouth shape, or viseme. This phoneme-to-viseme mapping works across languages and accents because it operates at the sound level, not the word level. If you speak with a regional accent, the AI detects the actual sounds you produce and syncs the avatar's mouth to match those exact pronunciations. For fast speakers, the system adjusts the timing of each mouth shape to keep pace with rapid syllables. For slow, deliberate delivery, it extends the duration of each viseme so the lips never appear to lag or rush ahead of the audio. The result is natural lip sync that respects your unique vocal characteristics. You can test this by generating videos with different voice clones or AI voices and comparing the mouth movement. Each will sync accurately because the underlying phoneme analysis adapts to the audio input, not a generic template.
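As a rough illustration of the phoneme-to-viseme idea described above, here is a minimal Python sketch. It is not Agent Opus's implementation: the lookup table, the viseme names, and the `visemes_from_phonemes` helper are all hypothetical, and the input is assumed to be a list of already-timed phonemes (symbol, start, end in seconds). Because each viseme simply inherits the timing of its phoneme, fast speech produces short mouth shapes and slow speech produces long ones.

```python
from dataclasses import dataclass

# Hypothetical, heavily simplified phoneme-to-viseme table (ARPAbet-style symbols).
# A real system would cover the full phoneme inventory of each language.
PHONEME_TO_VISEME = {
    "P": "lips_closed", "B": "lips_closed", "M": "lips_closed",
    "F": "lip_to_teeth", "V": "lip_to_teeth",
    "AA": "open_wide", "AE": "open_wide",
    "IY": "spread", "EH": "spread",
    "UW": "rounded", "OW": "rounded",
}


@dataclass
class TimedViseme:
    viseme: str
    start: float  # seconds
    end: float    # seconds


def visemes_from_phonemes(timed_phonemes):
    """Map (phoneme, start, end) tuples to visemes, merging adjacent repeats."""
    result = []
    for phoneme, start, end in timed_phonemes:
        # Unknown phonemes fall back to a neutral mouth shape.
        viseme = PHONEME_TO_VISEME.get(phoneme, "neutral")
        if result and result[-1].viseme == viseme and result[-1].end == start:
            # Same mouth shape continues: extend it instead of re-triggering.
            result[-1].end = end
        else:
            result.append(TimedViseme(viseme, start, end))
    return result
```

Calling `visemes_from_phonemes([("B", 0.0, 0.05), ("M", 0.05, 0.1), ("AA", 0.1, 0.2)])` yields two mouth shapes, because the two lip-closing sounds share one viseme. The mapping works on sounds rather than words, which is why the same table can serve different accents.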

What are best practices for writing scripts that produce the most natural AI lip sync video?

Natural lip sync starts with conversational scripts that match how people actually speak. Avoid long, complex sentences with multiple clauses, because they leave the avatar talking for extended stretches without natural pauses. Instead, write short sentences with clear subject-verb-object structure. Use contractions like "you're" and "it's" instead of "you are" and "it is," because contractions reflect real speech patterns and produce smoother mouth transitions. Include natural pauses by adding commas or breaking thoughts into separate sentences. This gives the AI cues to close the mouth briefly, mimicking how humans pause to breathe or emphasize a point. Avoid technical jargon or invented words unless you can spell them phonetically, because the AI may mispronounce unfamiliar terms and create mismatched lip movement. If your script includes numbers, write them out as words ("twenty-three" instead of "23") so the voice generator pronounces them correctly and the lip sync follows. Test your script by reading it aloud before generating the video. If it sounds stiff or unnatural when you speak it, the avatar will look stiff too. Agent Opus performs best with scripts that sound like a real person talking to a friend, not reading from a teleprompter.
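Some of these script guidelines can be checked automatically before you generate a video. The sketch below is a hypothetical helper, not part of Agent Opus: the `lint_script` name, the 20-word sentence threshold, and the short list of expandable phrases are assumptions chosen for illustration. It flags long sentences, digits that should be written out as words, and phrases that could be contractions.

```python
import re

MAX_WORDS_PER_SENTENCE = 20  # assumed threshold, not an Agent Opus setting

# Assumed sample of phrases that usually read better as contractions.
EXPANDED_FORMS = {
    "you are": "you're",
    "it is": "it's",
    "do not": "don't",
    "we are": "we're",
}


def lint_script(script):
    """Return a list of warnings for a lip sync script."""
    warnings = []

    # Split on whitespace that follows sentence-ending punctuation.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", script) if s.strip()]
    for sentence in sentences:
        word_count = len(sentence.split())
        if word_count > MAX_WORDS_PER_SENTENCE:
            warnings.append(f"Long sentence ({word_count} words): consider splitting.")

    # Digits are easy for a voice generator to read inconsistently.
    for number in re.findall(r"\d+", script):
        warnings.append(f"Write '{number}' out as words.")

    # Suggest contractions for more conversational delivery.
    lowered = script.lower()
    for expanded, contraction in EXPANDED_FORMS.items():
        if re.search(rf"\b{re.escape(expanded)}\b", lowered):
            warnings.append(f"Consider '{contraction}' instead of '{expanded}'.")

    return warnings
```

For example, `lint_script("You are going to love it. We shipped 23 updates.")` returns one warning about "23" and one suggesting "you're". A check like this does not replace reading the script aloud, which remains the best test.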

Can AI lip sync video maintain consistent branding across multiple videos with different scripts?

Yes, Agent Opus lets you upload brand assets like logos, product images, and color palettes that persist across all your AI lip sync video projects. When you generate a new video, the system pulls from your asset library to frame the avatar with consistent visual elements. For example, you can set a default lower-third graphic with your logo and tagline that appears in every video, or define a background template that uses your brand colors and product shots. The avatar itself can be consistent too. If you upload a photo of yourself or a team member, Agent Opus generates a digital version of that face and uses it for every video you create. Pair that with a cloned voice, and every video features the same speaker with the same visual and vocal identity. This consistency matters for building audience recognition. Viewers see the same face and hear the same voice across your TikTok, LinkedIn, and YouTube content, reinforcing your brand even when the script changes. You can also create multiple avatar-voice pairings for different content types. For example, use one avatar for product demos and another for customer testimonials, each with its own cloned voice and background template. Agent Opus saves these configurations so you can switch between them without re-uploading assets or adjusting settings.
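One way to picture these saved avatar-voice pairings is as named presets that get merged with each new script. The sketch below is purely illustrative: `BrandPreset`, `PRESETS`, `build_request`, and every field name and file name are hypothetical and do not describe Agent Opus's actual settings or any API.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BrandPreset:
    """Hypothetical bundle of the settings that stay fixed across videos."""
    avatar: str
    voice: str
    background_template: str
    lower_third: str


# Assumed example presets, one per content type.
PRESETS = {
    "product_demo": BrandPreset(
        avatar="founder_photo",
        voice="founder_voice_clone",
        background_template="brand_colors_product_shots",
        lower_third="logo_and_tagline",
    ),
    "testimonial": BrandPreset(
        avatar="customer_success_lead",
        voice="team_voice_clone",
        background_template="neutral_studio",
        lower_third="logo_only",
    ),
}


def build_request(content_type, script):
    """Combine a changing script with the fixed preset for its content type."""
    preset = PRESETS[content_type]
    return {"script": script, **asdict(preset)}
```

Only the script changes between videos of the same type, so the face, voice, and framing stay consistent without re-entering settings.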

What are the limitations or edge cases of AI lip sync video generation?

AI lip sync video works best with clear, conversational speech in widely spoken languages. Edge cases include voice-clone recordings with heavy background noise, extreme vocal styles like whispering or shouting, and languages with phoneme sets not well-represented in the training data.

If you clone your voice from a recording with music or ambient sound, the AI may struggle to isolate the speech phonemes, leading to less precise lip sync. To avoid this, record your voice clone in a quiet environment with a decent microphone.

Extreme vocal styles also challenge the system. Whispering reduces the acoustic energy of certain phonemes, making it harder for the AI to detect mouth-shape transitions. Shouting or singing introduces pitch variations that can confuse the phoneme-to-viseme mapping. For best results, use a natural speaking voice at moderate volume.

Another edge case is rapid code-switching between languages within a single script. If your script alternates between English and Spanish mid-sentence, the AI may not transition mouth shapes smoothly because each language has different phoneme rules. Stick to one language per video, or separate multilingual content into distinct clips.

Finally, very long scripts (over 10 minutes of speech) may produce videos where the avatar's expression becomes static over time. Agent Opus generates micro-expressions and head movements to keep the avatar lifelike, but extended monologues can feel less dynamic than shorter, punchier videos. Break long content into multiple videos to maintain visual interest and give the AI more opportunities to vary the avatar's performance.
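To act on the advice about long scripts, you can split a script at sentence boundaries before generating each clip. This is a minimal sketch under stated assumptions: the `split_script` helper is hypothetical, and the 150 words-per-minute speaking rate used to turn the 10-minute guideline into a word budget is a rough estimate, not an Agent Opus figure.

```python
import re

WORDS_PER_MINUTE = 150            # assumed average speaking rate
MAX_WORDS = 10 * WORDS_PER_MINUTE  # rough word budget for a 10-minute clip


def split_script(script, max_words=MAX_WORDS):
    """Split a script into clips of at most max_words, breaking only between sentences.

    A single sentence longer than max_words is kept whole as its own clip.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s]
    clips, current, count = [], [], 0
    for sentence in sentences:
        words = len(sentence.split())
        # Start a new clip when adding this sentence would exceed the budget.
        if current and count + words > max_words:
            clips.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += words
    if current:
        clips.append(" ".join(current))
    return clips
```

With a budget of six words, `split_script("One two three. Four five six. Seven eight nine.", max_words=6)` returns two clips, the first holding two sentences and the second holding one. Breaking only at sentence ends keeps each clip's opening and closing natural.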