AI Talking Avatar Video Generator

Turn any text into a polished AI talking avatar video in minutes. Agent Opus generates complete videos with AI avatars, custom voiceovers, and dynamic motion graphics from a simple prompt, script, or blog URL. No timeline, no editing, no design skills. Just describe what you need, and get a publish-ready video with a professional talking avatar that explains, sells, or teaches. Perfect for product demos, explainer videos, social content, and training materials. Start creating professional AI talking avatar videos today.


Why creators love Agent Opus' AI Talking Avatar Video Generator

On-Brand in Every Frame

Keep your visual identity consistent across every talking avatar video, no matter who's on your team.

Global Reach, Local Feel

Connect with international audiences by letting your avatar speak their language while maintaining your personality and credibility.

Skip Studio Costs Entirely

Create professional talking avatar videos without cameras, lighting, or hiring on-screen talent ever again.

Update Anytime, Instantly

Refresh outdated content or fix mistakes without reshooting: update the script, click generate, and your avatar delivers the new version.

Scale Content Without Burnout

Produce dozens of talking avatar videos per week while you focus on strategy, not recording sessions.

Reach Global Audiences Effortlessly

Deliver your message in multiple languages with natural-sounding talking avatars that connect across borders.

How to use Agent Opus' AI Talking Avatar Video Generator


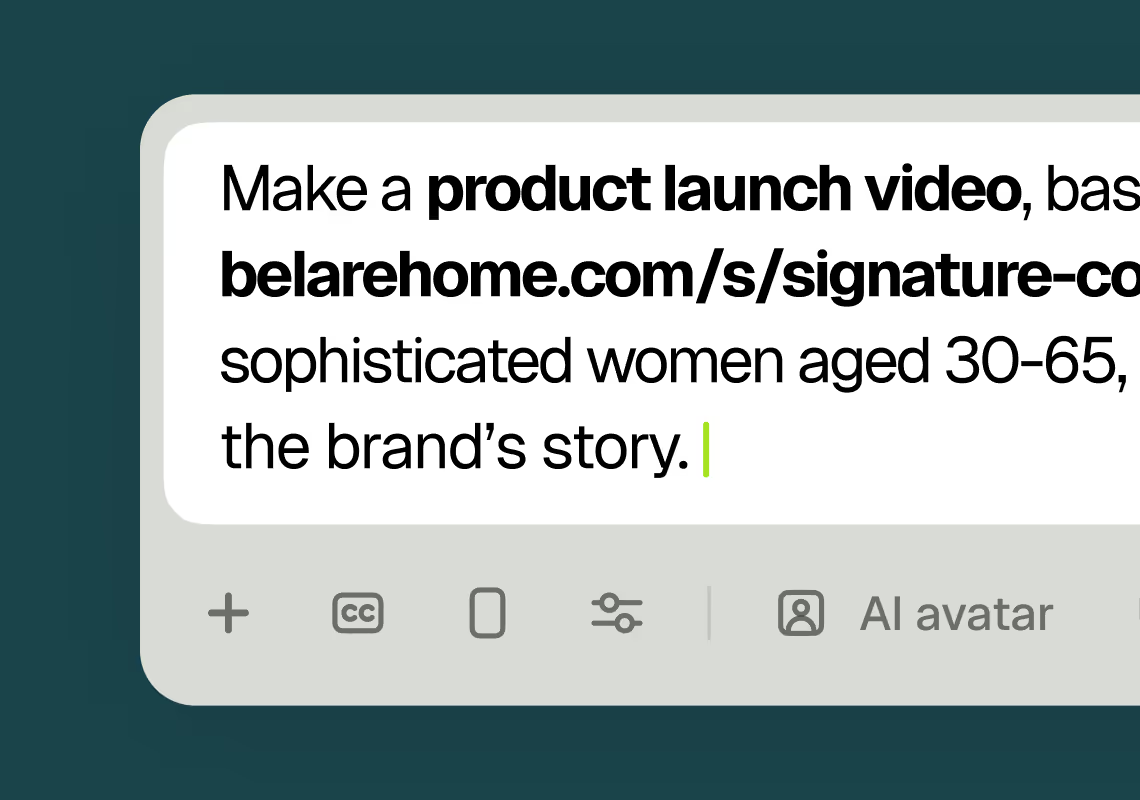

1. Describe your video

Paste your promo brief, script, outline, or blog URL into Agent Opus.


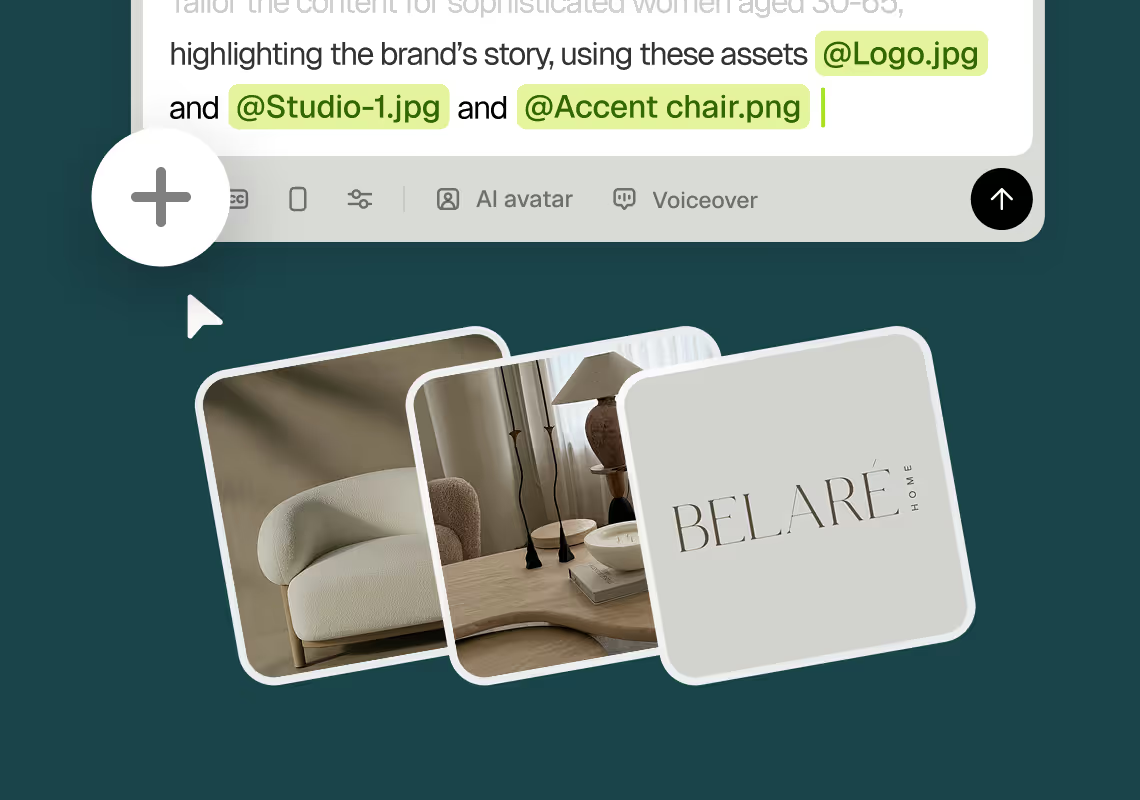

2. Add assets and sources

Upload brand assets like logos and product images, or let the AI source stock visuals automatically.


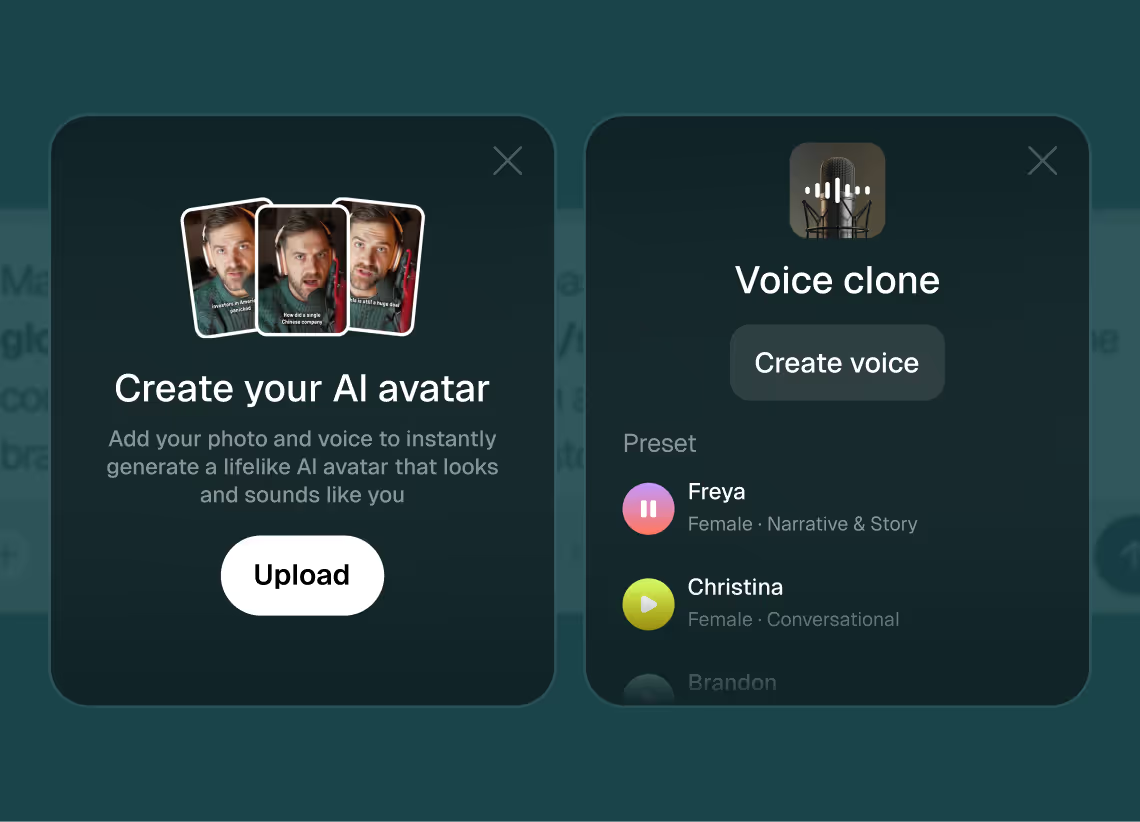

3. Choose voice and avatar

Choose a voice (clone your own or pick an AI voice) and an avatar (use your own or pick an AI avatar).


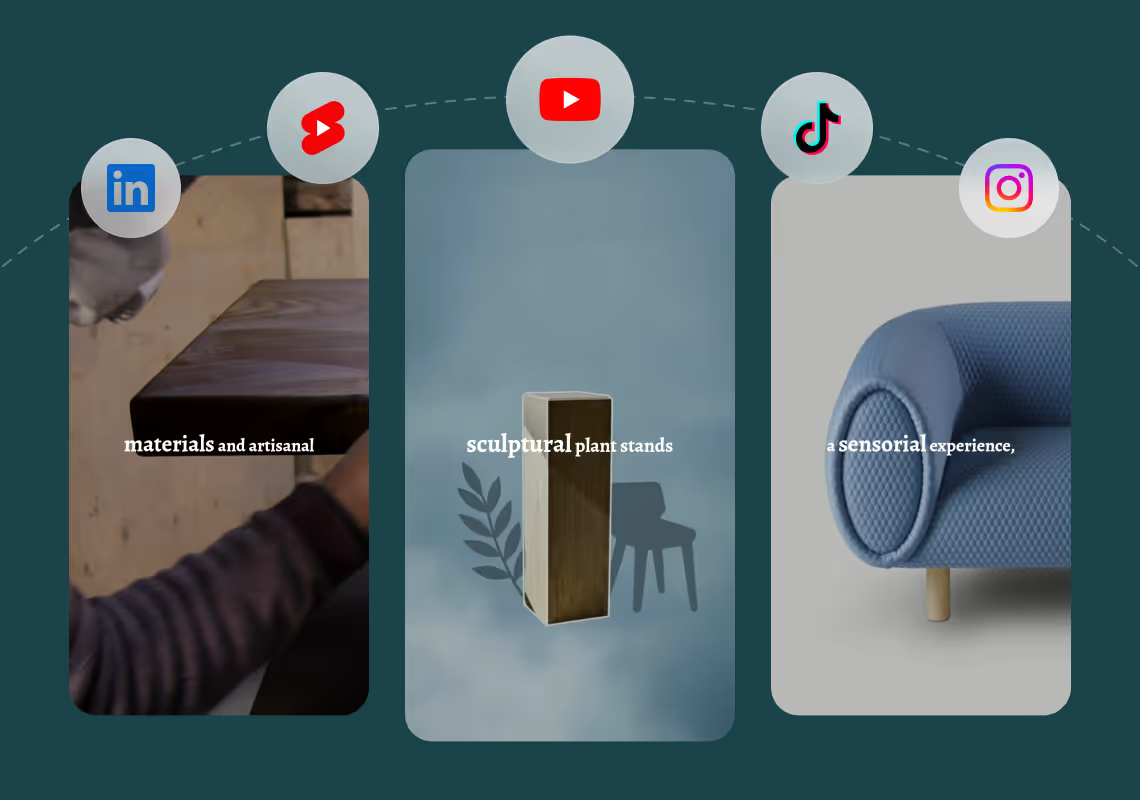

4. Generate and publish

Click generate and download your finished video, ready to publish across all platforms.

8 powerful features of Agent Opus' AI Talking Avatar Video Generator

Instant Avatar Generation

Create professional talking avatars from text prompts in seconds without filming or hiring actors.

Realistic Lip Sync

AI matches mouth movements to your script for natural, believable avatar speech.

Custom Avatar Styles

Choose from diverse avatar appearances and personalities that align with your brand voice.

Emotion Control

Adjust avatar tone and facial expressions to match your message's mood and intent.

Voice Selection

Pair your avatar with AI voices that match gender, age, and accent for authentic delivery.

Multi-Language Avatars

Generate talking avatars speaking dozens of languages with accurate pronunciation and expressions.

Background Customization

Place your talking avatar in branded environments or dynamic scenes that enhance your story.

Script to Avatar Video

Paste your script and watch AI transform it into a polished avatar presentation instantly.

Testimonials

Awesome output. Most of my students and followers could not catch that it was using Agent Opus. Thank you Opus.

Wealth with Gaurav

This looks like a game-changer for us. We're building narrative-driven, visually layered content — and the ability to maintain character and motion consistency across episodes would be huge. If Agent Opus can sync branded motion graphics, tone, and avatar style seamlessly, it could easily become part of our production stack for short-form explainers and long-form investigative visuals.

srtaduck

I reviewed version a and I was very impressed with this version, it did very well in almost all aspects that users need, you would only have to make very small changes and maybe replace one or 2 of the pictures, but even saying that it could be used as is and still receive decent views or even chances at going viral depending on the story or the content the user chooses.

Jeremy

all in all LOVE THIS agent. I'm curious to see how I can push it (within reason) Just need to learn to get the consistency right with my prompts

Rebecca

Frequently Asked Questions

How does AI talking avatar video generation work with different input types?

Agent Opus accepts four input types for AI talking avatar video creation, each optimized for different workflows.

First, you can write a simple prompt describing what you want the avatar to say and show. For example, 'Create a 60-second product demo where an AI avatar explains our new scheduling app features.' Agent Opus interprets your intent, generates a script, selects appropriate visuals, and assembles the complete video.

Second, you can paste a full script if you've already written your talking points. The system analyzes your script structure, identifies key moments for visual emphasis, and synchronizes the avatar's delivery with motion graphics that reinforce your message.

Third, you can provide an outline with bullet points or section headers. Agent Opus expands your outline into a full narrative, determines optimal pacing, and creates scene transitions that maintain viewer engagement.

Fourth, you can drop in a blog post or article URL. The AI extracts core concepts, condenses them into video-friendly segments, and generates an AI talking avatar video that turns written content into visual storytelling.

Regardless of input type, the system selects an avatar based on your brand voice, matches voiceover tone to content type, sources relevant imagery from stock libraries or web searches, and applies motion graphics that guide viewer attention.

The key advantage is consistency. Whether you start with three sentences or three thousand words, Agent Opus delivers a cohesive talking avatar video with professional production values. You're not stitching together clips or manually syncing audio. The AI accounts for narrative flow, visual hierarchy, and platform-specific best practices. If you batch content, you can queue multiple inputs and generate a library of AI talking avatar videos in one session, each tailored to specific platforms or audience segments.

What are best practices for prompts when creating AI talking avatar videos?

Effective prompts for AI talking avatar video generation balance specificity with creative flexibility.

Start by defining your core objective in one clear sentence. Instead of 'make a video about marketing,' try 'create a 90-second AI talking avatar video where a professional avatar explains three email marketing mistakes and how to fix them.' This gives Agent Opus a concrete goal, target length, and content structure.

Next, specify your audience and tone. 'For small business owners, conversational and encouraging' helps the AI select an avatar style, voice characteristics, and visual pacing appropriate for that audience. If you have brand guidelines, include them. 'Use our logo in the lower third, feature our product screenshots, maintain our blue and white color scheme' keeps the AI talking avatar video on-brand.

For voice, be explicit about what you want. 'Use my cloned voice with an upbeat delivery' or 'select a warm female AI voice with a slight British accent' gives the system clear direction.

When describing visuals, focus on concepts rather than technical details. 'Show examples of bad email subject lines with red X marks, then transition to good examples with green checkmarks' communicates intent without requiring you to specify exact animations or timing. Agent Opus translates conceptual descriptions into motion graphics automatically.

If you're creating tutorial or explainer content, structure your prompt in steps. 'First, the avatar introduces the problem. Second, explain why it matters with supporting statistics. Third, walk through the solution in three steps. Fourth, end with a clear call to action.' This sequential framework helps the AI pace the video and allocate appropriate screen time to each segment.

Avoid over-specifying technical details like frame rates, transitions, or exact timing unless you have specific platform requirements. The system optimizes these automatically based on content type and output format.

For social media AI talking avatar videos, mention the platform and context. 'Create a TikTok-style video where the avatar delivers quick tips in 15-second bursts' signals fast pacing and a vertical format.

Finally, iterate based on results. If your first video is too formal, add 'more casual and relatable' to your next prompt. Agent Opus learns from your adjustments and refines output accordingly.

Can AI talking avatar videos maintain consistent branding across multiple videos?

Yes, Agent Opus is built specifically for brand consistency across AI talking avatar video libraries. The system allows you to establish brand parameters once, then apply them automatically to every video you generate.

Start by uploading your core brand assets: logo files, product images, brand color codes, and any custom graphics you use regularly. These become part of your asset library, which Agent Opus references during generation.

For avatar consistency, you can select a specific AI avatar as your brand representative and lock it in for all videos. Whether you're creating product demos, tutorials, or social content, the same avatar appears, building recognition and trust with your audience.

Voice consistency works the same way. Clone your voice once, and every AI talking avatar video uses that exact voice profile. This is critical for personal brands where authenticity matters. Alternatively, select a specific AI voice and save it as your brand voice. The system remembers pitch, pace, and tonal characteristics, so every video sounds like it comes from the same source.

Visual style consistency extends to motion graphics and scene composition. Agent Opus learns your preferences over time. If you consistently use certain transition styles, color overlays, or text animation patterns, the AI incorporates these into future videos automatically. You're not manually recreating your style for each project.

For multi-video campaigns, you can create templates based on successful videos. If you generate an AI talking avatar video that performs well, save its structural elements as a template. Future videos can inherit the same pacing, scene structure, and visual rhythm while adapting the content to new topics. This is especially valuable for series content, where format consistency keeps viewers engaged.

Brand asset integration happens automatically during generation. When Agent Opus sources images or creates motion graphics, it prioritizes your uploaded assets over generic stock. Your product shots appear in relevant scenes, your logo keeps a consistent placement, and your brand colors dominate the visual palette.

For teams managing multiple brands or clients, you can create separate brand profiles within Agent Opus. Switch between profiles to generate AI talking avatar videos with completely different visual identities, voices, and asset libraries. This prevents cross-contamination and ensures each brand keeps its unique presence.

The key advantage is scalability. Once your brand parameters are set, you can generate dozens of AI talking avatar videos with minimal manual quality control. Every video follows your guidelines automatically, from avatar appearance to color grading to voice characteristics.

What are the limitations or edge cases of AI talking avatar video generation?

Understanding the boundaries of AI talking avatar video generation helps you set realistic expectations and plan accordingly.

First, highly specialized or technical jargon may require additional context in your prompts. If you're creating videos about niche industries with uncommon terminology, provide definitions or examples so Agent Opus can represent concepts accurately on screen. The AI is trained on broad knowledge but may need guidance for highly specialized content.

Second, integrating live-action footage has constraints. Agent Opus excels at generating complete videos from text, but if you need to incorporate specific live-action clips you've already filmed, you'll need to provide those as uploaded assets. The system can integrate them into the generated video, but it doesn't create live-action footage from scratch.

Third, extreme video lengths require strategic planning. Agent Opus handles videos from 15 seconds to several minutes effectively, but very long-form content like 20-minute webinars may be better approached as multiple shorter segments. The AI optimizes pacing and engagement for typical social and marketing video lengths.

Fourth, highly specific avatar customization has practical limits. You can choose from a range of AI avatars and even use custom avatars you provide, but requests for hyper-specific physical characteristics like 'avatar with exactly three freckles on the left cheek' exceed current generation capabilities. Focus on general style, professionalism level, and demographic representation.

Fifth, real-time data and live updates aren't supported. If your AI talking avatar video needs to display current stock prices, live poll results, or real-time metrics, you'll need to regenerate the video when the data changes. Agent Opus creates static videos based on the information available at generation time.

Sixth, certain creative styles may require iteration. If you're aiming for a very specific artistic look, like hand-drawn animation or retro VHS aesthetics, your first generation might need prompt refinement. The system excels at professional, modern motion graphics but may need guidance for unconventional styles.

Seventh, audio synchronization is optimized for standard speech patterns. If you want the avatar to rap, sing, or deliver content in highly rhythmic or musical formats, results may vary. The system is built for natural speech delivery.

Eighth, platform-specific compliance is your responsibility. Agent Opus generates videos optimized for social platforms, but you're responsible for ensuring content meets each platform's community guidelines, copyright rules, and advertising policies.

Finally, generation time scales with complexity. A simple 30-second AI talking avatar video with straightforward visuals generates quickly. A complex 3-minute video with multiple scene types, custom assets, and intricate motion graphics takes longer. Plan your workflow accordingly, especially when batch-generating content under tight deadlines.