Digital Human Video Maker

Agent Opus is a complete digital human video maker that transforms text into professional avatar videos in minutes. Describe your message or paste a script, and our AI generates a finished video with a realistic digital human presenter, voice, motion graphics, and social-ready formatting. No timeline, no editing, no video production experience required. From product demos to training content, create engaging digital human videos that connect with your audience instantly.

Explore what's possible with Agent Opus

Reasons why creators love Agent Opus' Digital Human Video Maker

No Studio, No Problem

Create professional presenter videos from your desk without cameras, lighting, or location scouting.

Studio Quality, No Studio

Skip location rentals and equipment costs while delivering professional-grade videos that look like you hired a full crew.

Scale Without Burnout

Produce dozens of videos weekly without hiring presenters, booking shoots, or sacrificing your creative energy.

Confidence in Every Upload

Publish knowing your digital human presenter delivers flawless takes every time, with no retakes or performance anxiety.

Launch-Ready in Minutes

Skip weeks of production and deliver polished digital human videos the same day you need them.

Confidence in Every Frame

Deliver flawless takes every time without worrying about lighting, makeup, or camera anxiety.

How to use Agent Opus’ Digital Human Video Maker

1

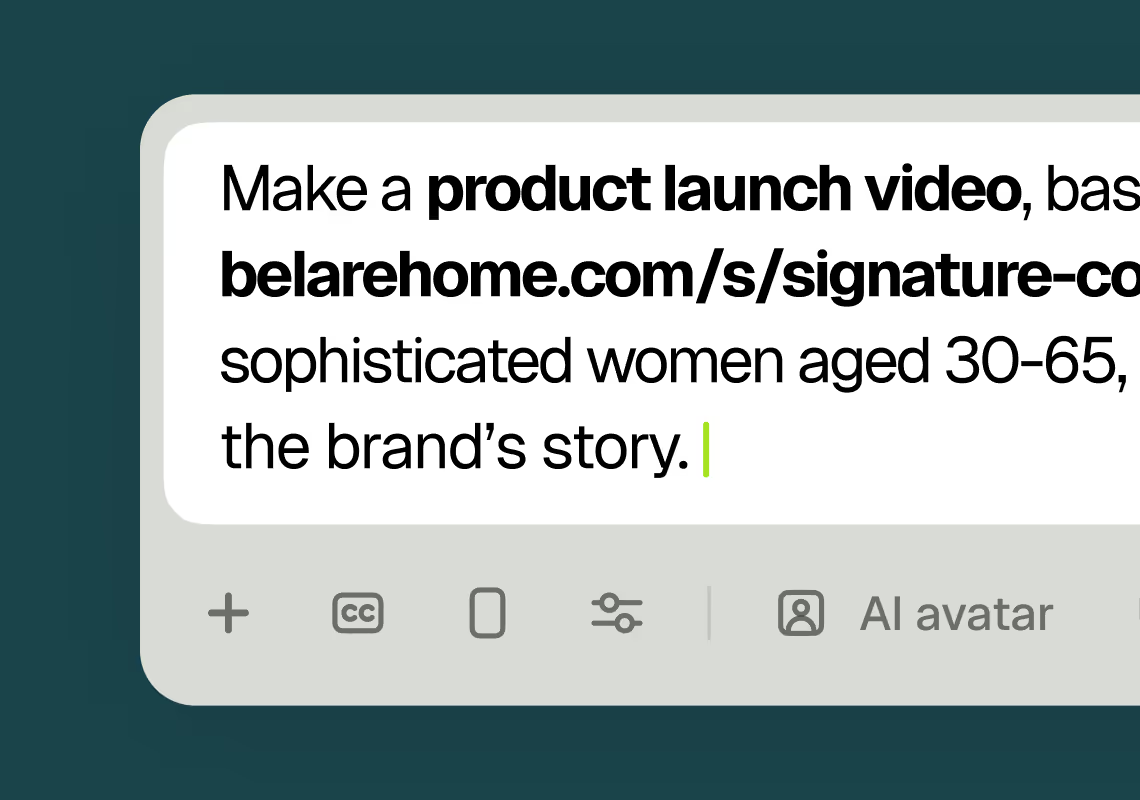

1Describe your video

Paste your brief, script, outline, or blog URL into Agent Opus.

2

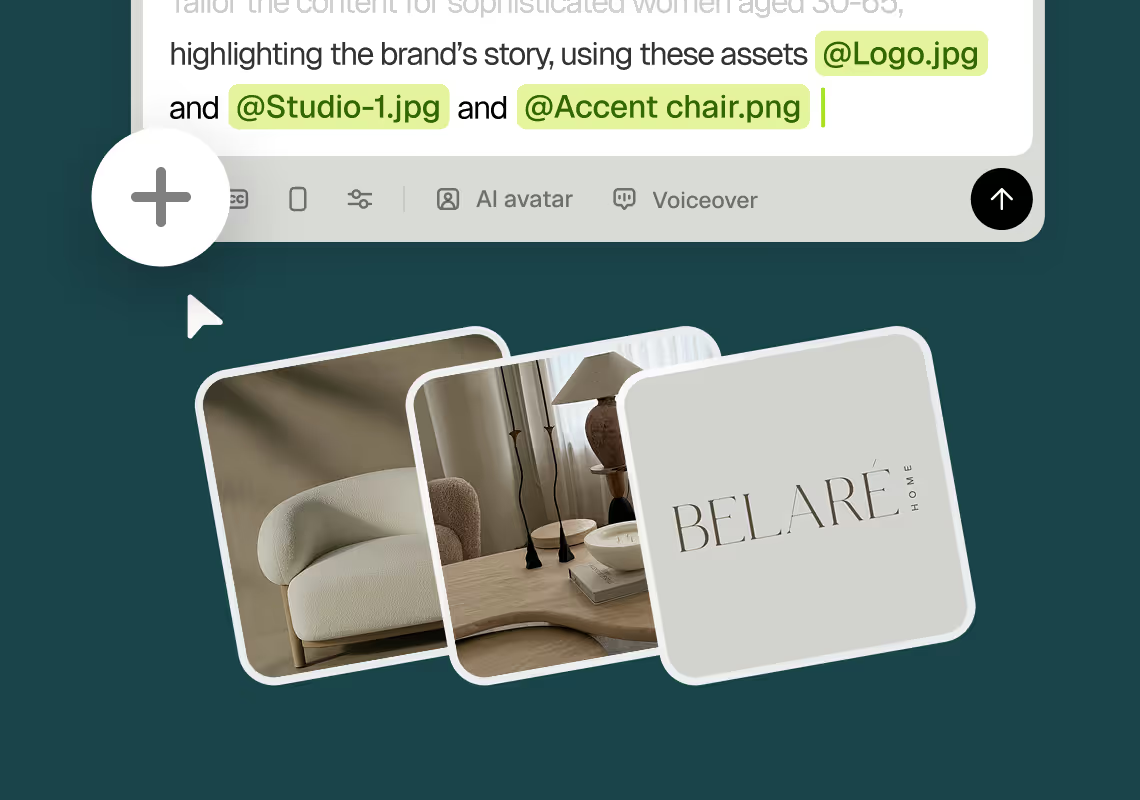

2Add assets and sources

Upload brand assets like logos and product images, or let the AI source stock visuals automatically.

3

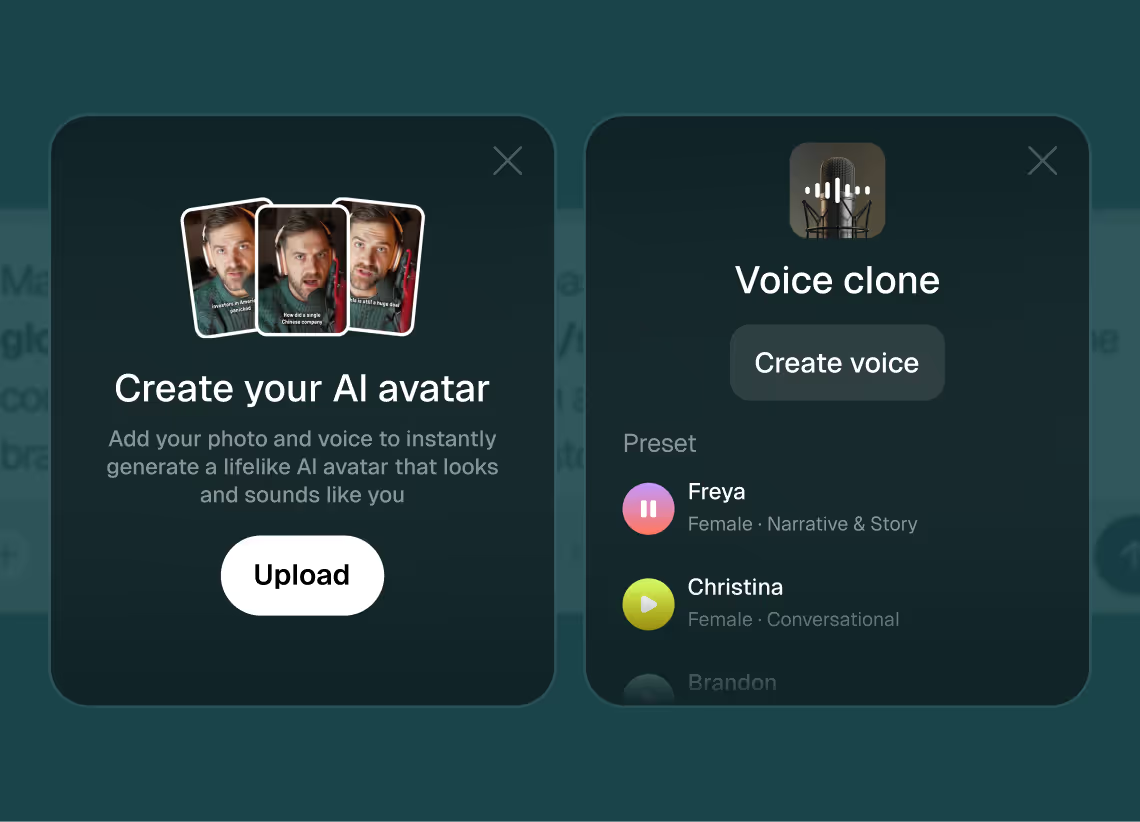

3Choose voice and avatar

Choose a voice (clone your own or pick an AI voice) and an avatar style (your own likeness or an AI-generated avatar).

4

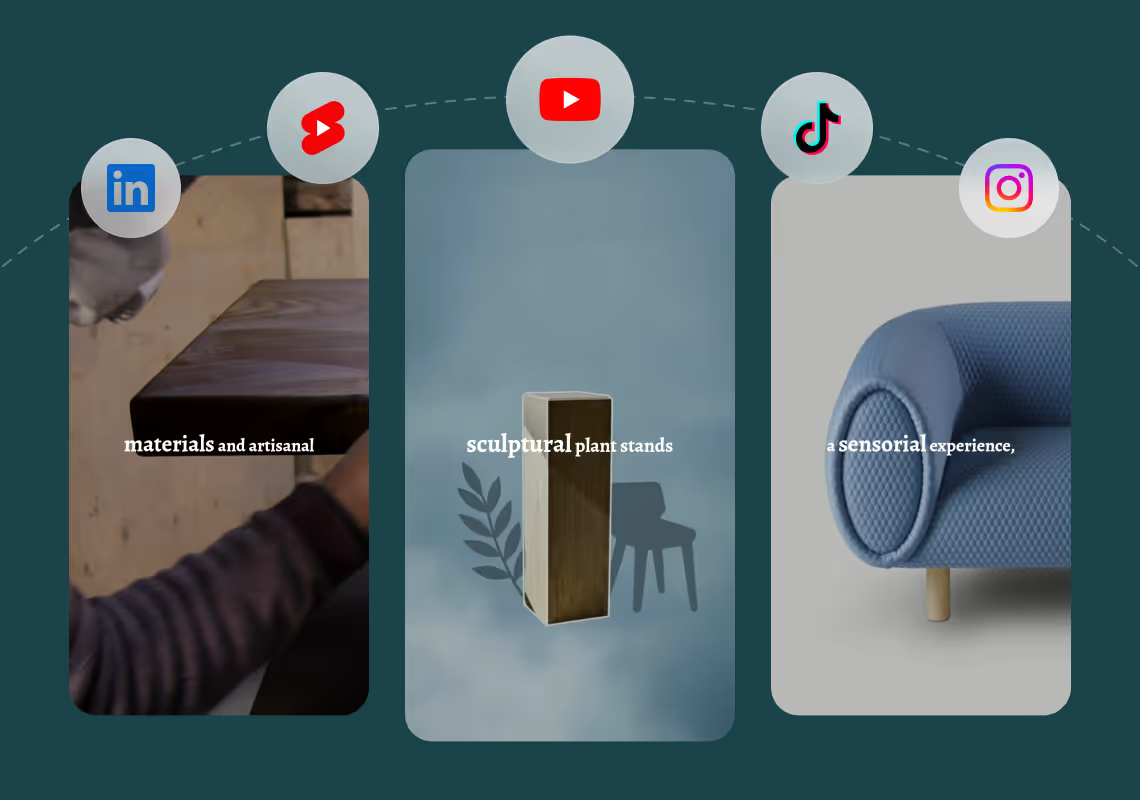

4Generate and publish-ready

Click generate and download your finished video in minutes, ready to publish across all platforms.

8 powerful features of Agent Opus' Digital Human Video Maker

Lifelike Digital Avatars

Generate photorealistic digital humans that speak your script with natural expressions and gestures.

Instant Video Generation

Turn text prompts into polished digital human videos in minutes without filming or actors.

Consistent Brand Presenter

Use the same digital human across all videos to build recognition and trust.

Professional Voice Synthesis

Pair your digital human with studio-quality AI voices that sound natural and engaging.

Multilingual Avatar Speech

Create digital human videos in dozens of languages with authentic pronunciation and lip-sync.

Custom Avatar Creation

Build branded digital humans with unique appearances, clothing, and personalities for your videos.

Script to Screen Automation

Transform written scripts into complete digital human presentations without manual production work.

Emotion-Driven Delivery

Control your digital human's tone and emotional range to match your message perfectly.

Testimonials

Awesome output. Most of my students and followers could not tell that it was made using Agent Opus. Thank you, Opus.

Wealth with Gaurav

This looks like a game-changer for us. We're building narrative-driven, visually layered content — and the ability to maintain character and motion consistency across episodes would be huge. If Agent Opus can sync branded motion graphics, tone, and avatar style seamlessly, it could easily become part of our production stack for short-form explainers and long-form investigative visuals.

srtaduck

I reviewed version a and I was very impressed with it. It did very well in almost all the aspects that users need. You would only have to make very small changes and maybe replace one or 2 of the pictures, but even so, it could be used as is and still receive decent views, or even have a chance at going viral, depending on the story or content the user chooses.

Jeremy

All in all, LOVE THIS agent. I'm curious to see how I can push it (within reason). Just need to learn to get the consistency right with my prompts.

Rebecca

Frequently Asked Questions

How does a digital human video maker handle different script lengths and presentation styles?

Agent Opus adapts the digital human presentation dynamically based on your script length and content type. For short promotional messages under 60 seconds, the system generates punchy delivery with faster pacing and energetic avatar gestures that work well on social platforms. For longer educational content or product demonstrations running three to five minutes, the AI adjusts the avatar's body language to include natural pauses, thoughtful expressions, and varied gestures that maintain viewer attention without feeling rushed.

When you input a detailed script with multiple sections, Agent Opus automatically segments the content and varies the digital human's positioning, background elements, and visual emphasis to create natural chapter breaks. The system analyzes your script's tone markers and adjusts the avatar's facial expressions and vocal inflection accordingly. A sales pitch receives confident, persuasive delivery, while training content gets a more measured, instructional approach.

You can also specify presentation preferences in your initial prompt, such as asking for a casual conversational style versus a formal corporate tone, and the digital human video maker will calibrate avatar performance, voice pacing, and visual treatment to match. This intelligent adaptation means you're not locked into a single template or forced to manually adjust timing and delivery across different video types.

Can I use my own face and voice as the digital human, or am I limited to AI-generated avatars?

Agent Opus supports both AI-generated digital humans and custom avatars created from your own video footage or photos. For voice, the platform includes advanced voice cloning that captures your unique vocal characteristics, accent, speaking rhythm, and tonal range. You simply upload a few minutes of clear audio samples, and the system builds a voice model that sounds authentically like you across any script. This is particularly valuable for founders, subject matter experts, and brand ambassadors who want their personal presence in videos without recording every version manually.

For the visual avatar, you can upload reference footage of yourself presenting to camera, and Agent Opus will generate a digital human that matches your appearance, mannerisms, and on-camera style. The system learns your natural gestures, head movements, and facial expressions to create realistic performances for new scripts. Alternatively, if you prefer not to use your own likeness, the platform offers a library of professional AI-generated digital humans with diverse appearances, ages, and presentation styles. You can select an avatar that aligns with your brand personality and target audience.

Many users combine a custom voice clone with an AI-generated avatar to maintain vocal authenticity while keeping visual flexibility. The digital human video maker handles lip sync automatically regardless of which combination you choose, ensuring the avatar's mouth movements, facial expressions, and head positioning stay perfectly synchronized with the audio throughout the entire video.

What types of visual content can a digital human video maker incorporate beyond the avatar itself?

Agent Opus builds complete video compositions that extend far beyond a talking head. The digital human video maker automatically sources and integrates relevant visual elements based on your script content and brand assets. When you mention specific products, concepts, or topics in your script, the AI searches royalty-free stock libraries and web sources to find appropriate images, graphics, and video clips that illustrate those points. You can also upload your own brand assets like logos, product photography, screenshots, infographics, and marketing materials, which the system intelligently weaves into the video at contextually appropriate moments.

The platform generates custom motion graphics and animated text overlays that emphasize key messages, display statistics, or highlight important terms as the digital human discusses them. These aren't static lower thirds; they're dynamic visual elements that appear, animate, and transition in sync with the avatar's speech and gestures.

Background environments adapt to your content type as well. A corporate message might place the digital human in a modern office setting with subtle branded elements, while a product demo could show the avatar against a clean backdrop with the product featured prominently alongside them. The AI handles layering and composition automatically, ensuring the digital human remains the focal point while supporting visuals enhance rather than distract from the message.

For training and educational content, the system can display diagrams, process flows, and step-by-step visuals that the avatar references and explains. All of these elements are assembled with professional timing, transitions, and visual hierarchy without requiring any manual editing or motion graphics skills on your part.

How does the digital human video maker ensure natural-looking avatar movements and avoid the uncanny valley effect?

Agent Opus uses advanced AI models trained on thousands of hours of professional presenter footage to generate natural, human-like avatar behavior. The system doesn't simply animate a static face; it creates full-body digital human performances with subtle weight shifts, natural breathing patterns, varied eye contact, and contextually appropriate gestures. When the avatar makes a point, you'll see hand gestures that match the emphasis in the voice. During transitions between topics, the digital human shifts posture slightly, just as a real presenter would. Facial expressions respond to the emotional content of the script, with micro-expressions like raised eyebrows during questions or slight smiles during positive statements.

The AI specifically avoids robotic patterns by introducing natural variation. The avatar doesn't gesture on every sentence or maintain perfectly rigid posture. Instead, it incorporates the small imperfections and asymmetries that make human movement feel authentic, like occasionally looking slightly off-camera before returning focus, or adjusting stance between major points. Lip sync goes beyond basic mouth shapes to include realistic tongue and teeth visibility, jaw movement, and the subtle facial muscle engagement that happens during speech.

The digital human video maker also calibrates avatar behavior to match voice characteristics. A confident, energetic voice gets more dynamic gestures and animated expressions, while a calm, authoritative voice receives more measured movements and steady eye contact. Lighting and rendering quality play a crucial role as well; Agent Opus uses high-fidelity rendering that captures skin texture, natural shadows, and realistic eye reflections rather than the flat, overly smooth appearance that triggers uncanny valley reactions. The result is a digital human that viewers perceive as a professional video presenter rather than an obvious AI creation.